At WWDC 2018, the annual Apple Worldwide Developers Conference that began on Monday, Apple announced the next release of macOS, version 10.14 Mojave, bringing with it yet another round of new features and improvements. However, one thing at its core has remained unchanged: the user interface.

When will Apple, or the competition for that matter, finally “think different” and bring us the next evolution of a user interface that has stood the test of time, updated for the future of personal computing?

Beyond Eye Candy?

macOS 10.14 Mojave will include, among other things, enhancements to the Finder and Desktop, but the biggest change will be a new dark mode. That’s all fine and dandy, but if anything, it seems like mere eye candy. Never mind the fact that Mac OS at version 10 is getting, well, a little long in the tooth, having been with us since the turn of the century! Apple needs to “wow” us with a totally new version of its operating system (version 11?), but what would be better yet?

The new dark mode in macOS 10.14 Mojave. (Photo: Courtesy of Apple)

Allow me to let someone else step in with that answer.

In a December 2001 opinion piece here on Low End Mac, writer Stephen Van Esch discussed the operating system and its interface. While Apple and Microsoft continue to tweak features in each OS release, each copying the other, nothing fundamental has really changed.

Is Change Inevitable?

Van Esch wrote, “One problem is the atrophying of innovation in the OS space. Clicking icons and moving files about hasn’t changed since Apple introduced the first Mac OS in 1984.”

He also wrote, “What Apple needs now, more than ever, is to redefine the OS landscape. … When I say redefine the OS landscape, I mean that Apple has to come up with the next generation operating system, not an operating system you see and use today, but whatever will supersede what we see and use today.”

“And it’s coming. Maybe not now or next year or even within the next decade, but eventually someone smarter, more visionary and more innovative than you or I will come up with the next interface between computers and humans. With any luck, that person will be Apple’s staff,” wrote Van Esch.

So what could, or should, that new operating system interface be?

Option 1: Touch-Enabled

The iPhone X. (Photo: Courtesy of MockupWorld)

We have had more than three decades of point-and-click with a mouse or trackpad on Mac OS. The most logical step forward would be a touch-enabled user interface, given that touchscreens have become ubiquitous on our smartphones and tablets since Apple reinvented the smartphone in 2007 and brought with it iOS, with Android following suit shortly thereafter.

I have opined many times over here on Low End Mac and in its Facebook group that I look forward to the day Mac OS uses a touch-enabled user interface, whether fully or in hybrid form.

For me, it’s more out of pure necessity than outright want. In case you didn’t already know from reading my many articles, I am visually impaired and totally blind. As I have said before, I want Mac OS to become touch-based because I have found VoiceOver (Apple’s built-in screen reader software) on iOS a much more intuitive and easier-to-use interface than the same experience on Mac OS. As a result, my iOS devices have replaced my actual computers as my primary computing hardware.

Option 2: Voice-Activated

Another possibility already present in modern computing is the voice-activated digital assistant.

In the 1986 film Star Trek IV: The Voyage Home, Scotty (played by the late actor James Doohan), having traveled back in time and unfamiliar with the computing interfaces of the 1980s, attempts to operate a Mac Plus by talking into its mouse. (Photo: Courtesy of Paramount)

Our own Dan Knight, the publisher of Low End Mac, makes this point in a recent update to Van Esch’s article, where he wrote, “I would posit that the next generation interface is voice control. In 2018, we have Siri from Apple (2011), Alexa from Amazon (2014), Cortana the one in Windows that nobody is talking about (also 2014), and Google assistant (2016) to talk to and respond back by speaking.”

I agree with Knight because, in a way, touch control has been with us for quite a while as well. Believe it or not, it was first invented in the 1940s but did not become feasible until 1965, as reported by Ars Technica in a piece about the history of the touchscreen.

The piece even nods to the original Star Trek series, which debuted in 1966 and showed the technology on screen, though the franchise did not specifically mention it by name until the sequel, Star Trek: The Next Generation, in 1987. If you are a Trek fan like me, you also know that in addition to the touch-enabled interface found on every starship, there is voice-activated control. A memorable and humorous scene that comes to mind is Scotty in 1986’s Star Trek IV: The Voyage Home. Unfamiliar with the technology of the era that he and the crew of the USS Enterprise have traveled back to, he attempts to operate a computer (which happens to be a Mac Plus) by voice, holding the mouse and speaking commands into it.

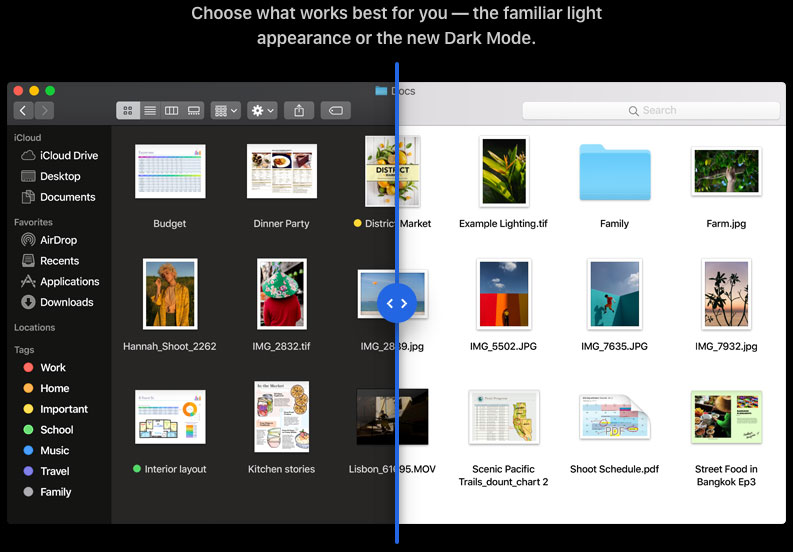

The Apple Newton MessagePad with a stylus. (Photo: Courtesy of Apple)

Even at Apple, the first device to employ a touchscreen interface was its PDA, the Newton MessagePad, announced in 1992. It was a device ahead of its time, and back then you needed a stylus to operate the touchscreen display. That was until, of course, Apple revolutionized the concept with the iPhone in 2007 by simply using what the late Apple CEO Steve Jobs called “the best pointing device in the world,” a pointing device we’re all born with: our fingers.

Option 3: Eye-Tracking

One user interface that is more recent and seemingly even further ahead of its time is eye-tracking technology.

In a 2011 Digital Trends article titled “Death of the Mouse: How Eye-Tracking Technology Could Save the PC,” author Jeffrey Van Camp delves into touch-based interfaces, the tried-and-true keyboard and mouse, motion sensors, and a fourth option: eye-tracking technology.

Van Camp wrote, “In the last few years, touch control has revolutionized the way we interact with mobile devices. The technology has been so popular on smartphones that Apple used its proven touch approach to reinvent the dead tablet market with the iPad.”

“With all of these motion-controlled interfaces for video game systems and touch interfaces for mobile devices, the PC with its keyboard and mouse just feels, well, old.”

He went on to write that the mouse and trackpad are a “step removed” from the natural feel of motion-sensing devices like the Nintendo Wii controllers and the Xbox Kinect, or the touch controls of tablets such as the iPad.

Eye tracking illustration from Tobii Technologies. (Photo: Courtesy of Tobii)

But he goes a step further and brings up the eye-tracking technology created by the Swedish company Tobii Technologies.

The technology, installed in 20 prototype Lenovo laptops with built-in infrared sensors, tracked eye movement so precisely and quickly that when Van Camp tested it for himself, he felt even the best mouse interfaces were antiquated by comparison. He tried out eye tracking on the prototype laptops with tasks such as reading, playing media, gaming, multitasking, and zooming and panning (as with maps).

“The fluidity of the experience reminded me of the first time I used the iPod touch and how natural it felt to swipe and touch precisely where I wanted. Before the (iPod) touch and iPhone, most touchscreens used resistive touch technology which required you to actually press down on the screen. These screens demanded a stylus pen-like device to achieve precision but Apple changed the game with its more natural interface that let you directly use your fingers,” wrote Van Camp.

He added, “Tobii’s eye control technology is as direct as any touch interface. It feels like touch from afar.”

So among the three of us, with me arguing for touch, Knight for voice, and Van Camp for eye tracking, which control interface is the right one?

The Finger is the ‘Mobile King’

An outsider who can settle this debate (although Digital Trends’ Van Camp is also a third party in this commentary) is Jason Cipriani of Fortune magazine, who, in a 2014 article on fortune.com, wrote that Steve Jobs was right and that there’s still no better mobile computing tool than your finger.

Cipriani tested every interface discussed in this article so far, from the keyboard and speech-activated digital assistants to eye-tracking technology and even the stylus. His verdict should come as no surprise.

“Every day we interact with countless electronic devices without giving a thought as to how we’re doing it. … You likely use a desktop or laptop most working days. You also likely no longer think about keyboards, mice, or touchpads. These interfaces have been worked out over years if not decades and have changed little,” wrote Cipriani.

He continued, “Yet each time I pick up a smartphone, I am subconsciously forced to make a decision on how to interact with it. If I want to know if my calendar is free, do I use my thumbs or my voice? When I send a text message, should I tap or use a series of swipe gestures and hope the underlying software can decipher my scribbles? Is this task best suited to a stylus? Is my gaze really the ideal indicator that I want a webpage to scroll? After less than a decade of existing, smartphones have presented us with a wealth of options.”

“With so many different systems available, I got to thinking: ‘are manufacturers truly on to something here or are they just throwing mud at the wall?’ It’s equally and frustrating to have several options for how to interact. So I started to experiment, step outside my comfort zone, and use every input method I could get my hands or eyes on. I wanted to know what the best way to use these devices is, and whether it says anything about the increasingly mobile world we now find ourselves in.”

Of note regarding eye-tracking technology: three years after Digital Trends writer Van Camp tested the interface on prototype machines, Samsung had incorporated the technology into its smartphones by 2014 (whether it came from Tobii Technologies is unknown). Cipriani found it creepy and gimmicky, with extremely limited functionality; as he wrote, “…it’s not going to change the principal way you interact with a mobile device.”

Of course, as I already said, we know what Cipriani concluded after all the interface testing he went through.

“Software developers have devised a number of clever ways to interact with your smartphone or tablet. It turns out that the most effective method for the majority of cases is your grubby, inaccurate, clumsy digit, a tool you’ll always have with you.”

Physical Keyboards Still Have a Place

Apple wireless keyboard and mouse. (Photo: Courtesy of Apple)

Cipriani closed with, “As I finish writing this column using a hardware keyboard plugged into a tablet computer, I’m reminded that more than a century after the QWERTY keyboard was invented and four decades after the mouse was developed, they are still the dominant ways we use desktop computing devices. We use them because, strange as they are, they’re what works best for most applications.”

“The spate of alternate input technologies for mobile devices are novel, artful, even useful in certain cases, but they’ve been most useful in showing us that the finger is still the mobile king.”

Mobile Devices Are Quietly Displacing Desktop Computers

Granted, Cipriani focused on mobile computing, but as we all know, our smartphones and tablets are increasingly becoming our everyday computing devices (as in my case), slowly reducing or supplanting the need for a desktop computer.

With Apple’s iOS having pioneered and popularized the touch-based interface we control simply with our fingers, the next logical step for Apple would be to bring that touch interface to macOS and once again reinvent the way we interact with our computers.

Never mind that Apple CEO Tim Cook has previously ruled out merging Mac OS and iOS, a position Apple Senior Vice President of Software Engineering Craig Federighi reiterated on Monday when presenting macOS 10.14 Mojave at WWDC 2018.

Touch Reaches the Widest User Base

The way I see it (not physically, of course), of the three alternative interfaces, touch control reaches the widest user base, with the exception of those who do not have the use of their hands. Voice control excludes the hearing impaired and those who have speech impediments. Eye control excludes the visually impaired.

To be fair, perhaps making all three technologies available alongside the keyboard and mouse or trackpad would let users choose the interface best suited to their needs for whatever application or task is at hand, while also addressing the needs of those with disabilities.

Whatever new interface comes to the forefront of personal computing, keep in mind that Van Esch, who wrote that it might not arrive even within the next decade, wrote that piece 17 years ago. With any luck, he just may get his wish, and we may see an operating system from Apple with a new interface come the turn of the next decade in 2020, especially with talk of Apple switching the Mac to its own in-house processors within two years, chips designed to best run a mobile operating system such as iOS.
